Creating a Good Security Conference CFP Submission

So you’re interested in submitting a talk for a security conference? Awesome! Above all else, what keeps our industry moving forward is the free and open sharing of information. Submitting a talk can be a scary experience, and the process for how talks are evaluated can feel mysterious.

So what’s the best way to create a good security conference CFP submission?

It’s perhaps best to consider the questions that the review board will ask themselves as they review the submissions:

- Will this presentation be of broad interest (i.e., to 10-20% of attendees)?

- Is this presentation innovative and useful?

- Is this presentation likely to be able to deliver on its outline and promises?

- Is this presentation consistent with the values of the conference?

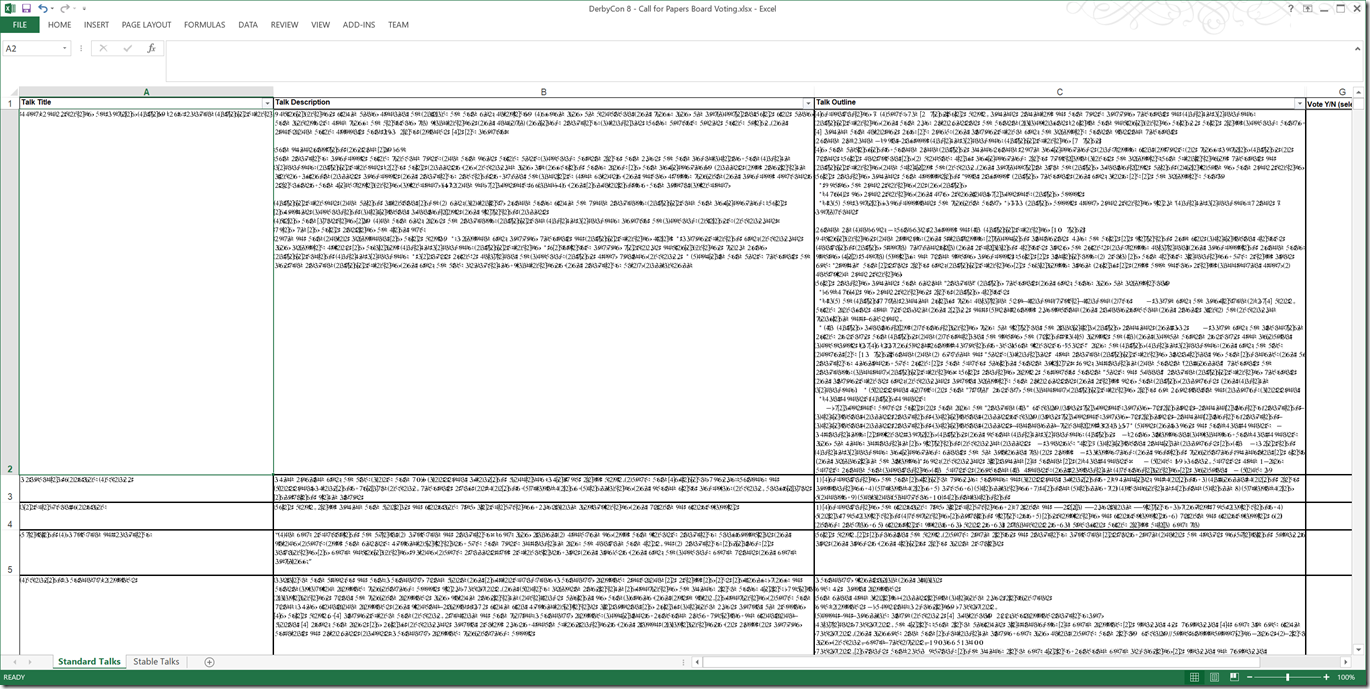

They are also likely going to review submissions in an extremely basic web application or Excel.

See that scroll bar on the right? It’s tiny. For DerbyCon this year, the CFP board reviewed ~500 submissions. That’s a lot of work, but it’s also an incredible honour. It’s like going to an all-you-can-eat buffet prepared by some of the top chefs in the world. But that buffet is 3 miles long. It’s overwhelming, but in a good way :)

Let’s talk about how you can create a good CFP submission based on the questions reviewers are asking themselves.

Review Board Criteria

Will this presentation be of broad interest?

If a conference is split into 5 tracks, accepted presentations must be interesting to about 20% of the attendees. If your talk is too specialized - such as the latest advances in super-theoretical quantum cryptography - you might find yourself talking to an audience of 4.
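The arithmetic behind that 20% figure can be sketched in a few lines of Python. The function name and the attendee count are assumptions for illustration only, and the sketch assumes attendees spread evenly across tracks that all run at the same time.

```python
def share_per_track(tracks: int) -> float:
    """Fraction of attendees each talk needs to draw if all tracks run
    at once and attendees spread evenly (an illustrative assumption)."""
    if tracks < 1:
        raise ValueError("a conference needs at least one track")
    return 1 / tracks


attendees = 1000  # hypothetical conference size
tracks = 5
share = share_per_track(tracks)
print(f"{share:.0%} of attendees, about {round(attendees * share)} people per room")
```

With more parallel tracks the required share drops, which is one reason larger conferences can afford more specialized talks.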

A common problem in this category is vendor-specific talks. Unless the technology is incredibly common, it will just come across as a sales pitch. And nobody wants to see a sales pitch.

That said, some talks are of broad interest exactly because they are so far outside people’s day-to-day experience. While an attendee may never have the opportunity to experience the lifestyle of a spy, an exposé of the life of one would most certainly have popular appeal.

Is this presentation innovative and useful?

The security industry is incredible at sharing information. For example, @irongeek records and shares thousands of videos from DerbyCon, various BSides events, and more. DEF CON has been sharing videos for the last couple of years, as have Black Hat and Microsoft’s BlueHat. If an audience member is interested in a topic, there’s a good chance they’ve already watched something about it through one of these channels. In your CFP submission, demonstrate that your presentation is innovative or useful.

- Does it advance or significantly extend the current state of the art?

- Does it distill the battle scars from attempting something in the real world (e.g., at a large company, team, or product)?

- If it’s a 101 / overview type presentation, does it cover the topic well?

Is this presentation likely to be able to deliver on its outline and promises?

Presentation submissions frequently promise far more than what they can accomplish. For example:

- Content outlines that could never be successfully delivered in the time slot allotted for a presentation.

- Descriptions of research that is in progress that the presenter hopes will bear fruit. Or worse, research that hasn’t even started.

- Exaggerated claims or scope that will disappoint the audience when the actual methods are explained.

Is this presentation consistent with the values of the conference?

Some presentations are racist, sexist, or otherwise likely to offend attendees, and these are easy to weed out. The problem might not be obvious to you at first, though: slang you use amongst your friends or coworkers can come across much differently to an audience.

Many conferences aim to foster a healthy relationship between various aspects of the community (e.g., researchers, defenders, vendors), so a talk that is overly negative or discloses an unpatched vulnerability in a product is likely not going to be one that the conference wants to encourage.

On the other hand, some conferences actively cater to the InfoSec tropes of edgy attackers vs defenders and vendors. You might find an otherwise high-quality Blue Team talk rejected from one of those.

Some submissions may appear to skate a fine line on this question, so good ones are explicit about how they will address this concern. For example, they mention that the vulnerability they are presenting has been disclosed in coordination with the vendor and will be patched by the time the presentation is given.

Common Mistakes

Those are some of the major thoughts going through a reviewer’s mind as they review the conference submissions. Here are some common mistakes that make it hard for a reviewer to judge submissions.

- Is the talk outline short? If so, the reviewer probably doesn’t have enough information to evaluate how well the presentation addresses the four main questions from above. A good outline is usually between 150 and 500 words. See talks 3, 4, and 5 from the screenshot above to see how this looks in practice!

- Does the title, description or outline rely heavily on clichés? If so, the presentation is likely going to lack originality or quality – even if it is for fun and profit.

- Is the talk overly introspective? Talks that focus heavily on the presenter (“My journey to …”) are hard to get right, since attendees usually need to be familiar with the presenter in order for the talk to have an impact. Many review processes are blind (reviewers don’t know who submitted the session), so this kind of talk is almost impossible to judge.

- Is the talk a minor variation of another talk? Some presenters submit essentially the same talk, but under two or three names or variations. What primarily drives a reviewer’s decision of a talk is the meat, not a bit of subtle word play in the title. They will likely recognize the multiple variations of the talk and select only one – but which one specifically is unpredictable. When votes are tallied, three talks with one vote each are much less likely to be selected than a single talk with three votes.

- Is the submission rife with grammar and spelling errors? I don’t personally pay much attention to this category of mistake, but many reviewers do. If you haven’t spent the effort to run your submission through a spelling and grammar check, how much effort will you spend on the talk itself?
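
The vote-splitting effect described in the point about minor variations can be illustrated with a toy tally. This is only a sketch under stated assumptions: the talk titles, the three hypothetical reviewers, and the simple "rank by vote count" rule are invented for illustration and do not describe any particular conference's process.

```python
from collections import Counter


def tally(votes: list[str]) -> list[tuple[str, int]]:
    """Rank talks by number of reviewer votes, highest first."""
    return Counter(votes).most_common()


# Hypothetical: three reviewers like the idea, but each picks a different variation.
split = tally(["Variation A", "Variation B", "Variation C", "Other talk", "Other talk"])

# The same three reviewers, when only one version was submitted.
single = tally(["One submission"] * 3 + ["Other talk", "Other talk"])

print(split[0])   # the competing talk leads with 2 votes
print(single[0])  # the single submission leads with 3 votes
```

In the split case each variation ends up with one vote and loses to a talk with two, even though the same three reviewers liked the underlying idea.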